This post is part of the Fantastic F# Advent Calendar 2019 that has become a Christmas tradition over the years to bring the F# community together to celebrate the holiday season. A special thank you goes to the organizer, Sergey Tihon (@sergey_tihon) who is keeping this tradition vibrant and magical.

The goal: Distribute the work across multiple machines using Akka.NET and Docker

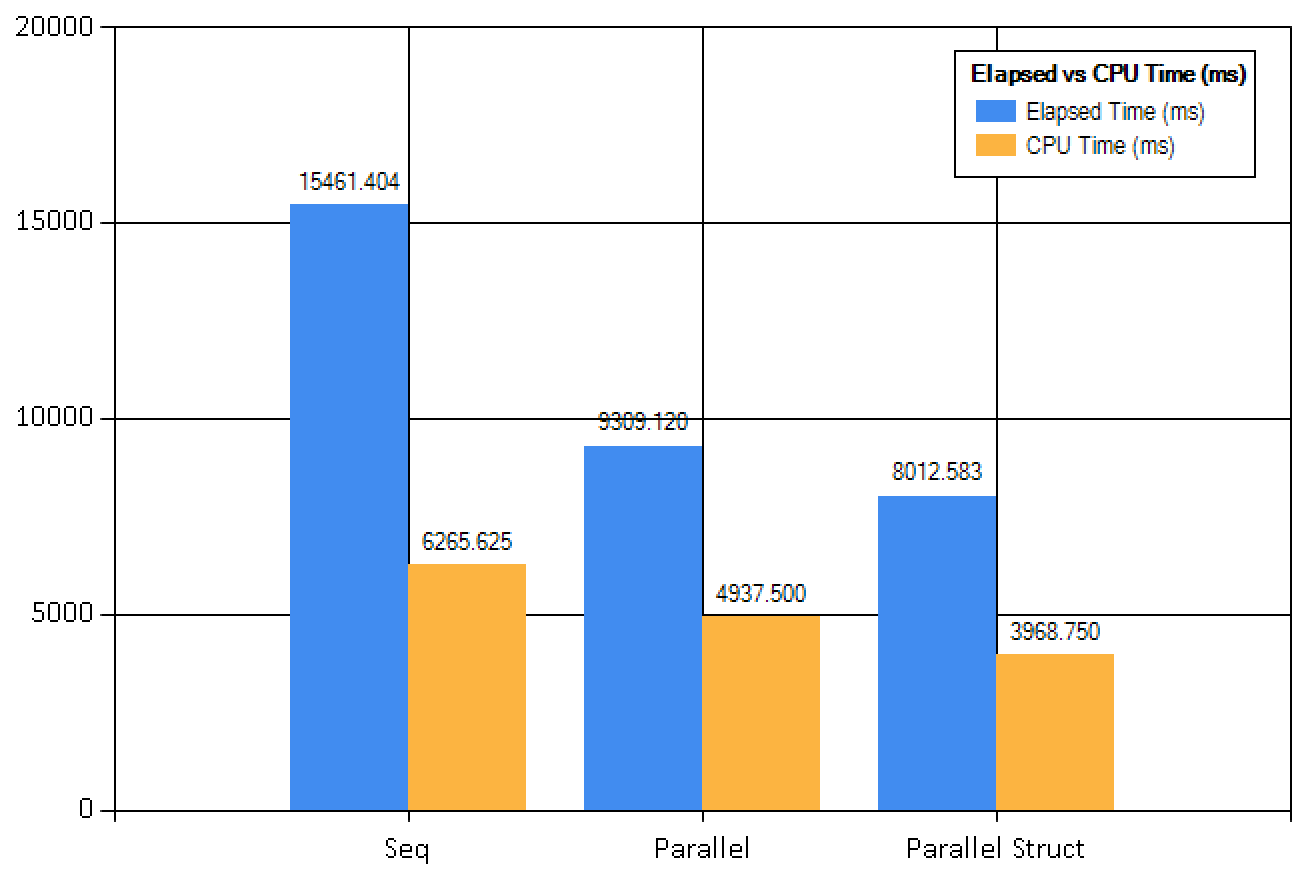

The goal of this project is to demonstrate how to implement a program that parallelizes an algorithm by distributing the work across machines. We will emulate the network distribution using Docker containers. The distribution will be done in a reliable, resilient, and fault-tolerant fashion. This kind of design is also called “reactive architecture”, which refers to a system that remains responsive even under a significantly increased load.

For the sake of the demonstration, we are implementing a program that processes a big set of image blocks (tiles) to compose a large fractal image. The idea is to create a huge number of tiles from a larger empty image, and then transform each tile individually and in parallel. When a tile’s rendering is completed, we position it back at its original location. When all the tiles have been processed and put back together, a large fractal (Mandelbrot) image is formed.

Because of the parallel nature of this program, we will see the fractal image form in pieces that appear concurrently, like a puzzle being solved. For example, let’s say that we want to generate a Mandelbrot image with both a width and a height of 4000. When we run the program, a blank square image of this size is split into small squares for processing. The 4000 square splits into 40,000 squares (200 per side) with a side of 20, which are then processed individually in parallel.

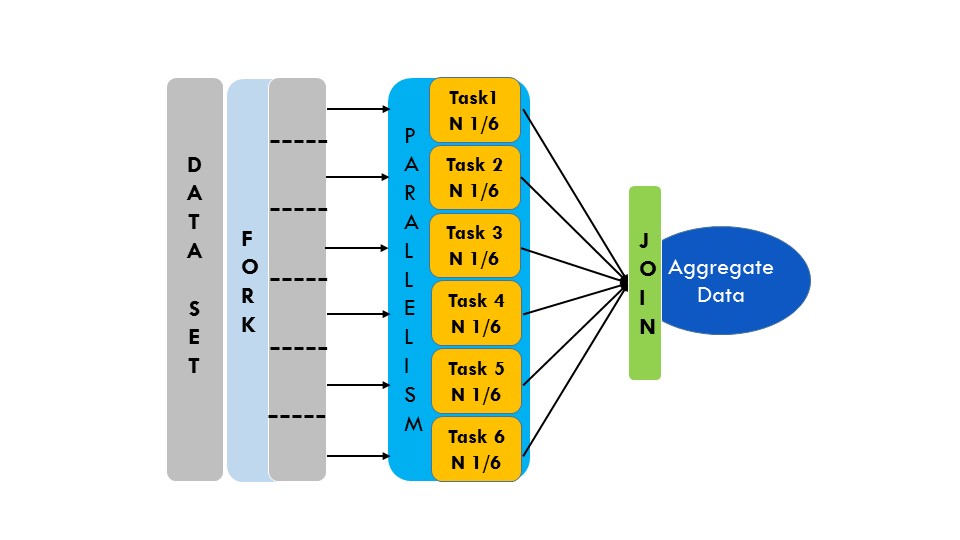
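The arithmetic of this split can be checked with a short F# sketch. The helper `tileOrigins` and its parameter names are illustrative assumptions and are not part of the project; the sketch only lists the top-left corner of each tile.

```fsharp
// Hypothetical helper (not from the project): list the top-left corner of
// every square tile that covers a square image of side imageSize.
let tileOrigins (imageSize : int) (tileSide : int) =
    [ for y in 0 .. tileSide .. imageSize - 1 do
        for x in 0 .. tileSide .. imageSize - 1 do
            yield (x, y) ]

let origins = tileOrigins 4000 20

// 4000 / 20 = 200 tiles per side, so 200 * 200 tiles in total.
printfn "tiles per side: %d" (4000 / 20)
printfn "total tiles: %d" (List.length origins)
```

Each origin, together with the tile side, is enough information to render that tile independently of all the others.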

In general, to improve the performance of a program that runs on a multicore machine, we take a problem that can be broken into smaller parts that run independently and in parallel, and then rejoin the individual results to solve the original larger problem. Algorithms like this are known as divide and conquer.

NOTE: The Divide and Conquer pattern solves a problem by recursively dividing it into subproblems, solving each one independently, and then recombining the sub-solutions into a solution to the original problem.
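As a minimal sketch of the pattern on a single machine, the following F# function sums an array by splitting the range in half, solving both halves in parallel, and recombining the results. The function name `sumRange` and the threshold of 10000 are arbitrary choices for illustration, not something taken from the project.

```fsharp
// Illustrative divide and conquer: sum the elements data.[lo .. hi - 1].
let rec sumRange (data : int64[]) (lo : int) (hi : int) : Async<int64> =
    async {
        if hi - lo <= 10000 then
            // Base case: the subproblem is small enough to solve directly.
            return Array.sum data.[lo .. hi - 1]
        else
            // Divide: split the range in half and solve both halves in parallel.
            let mid = lo + (hi - lo) / 2
            let! halves = Async.Parallel [ sumRange data lo mid; sumRange data mid hi ]
            // Combine: merge the two sub-solutions.
            return halves.[0] + halves.[1]
    }

let numbers = Array.init 100000 int64
let total = sumRange numbers 0 numbers.Length |> Async.RunSynchronously
printfn "sum = %d" total
```

The fractal rendering follows the same shape, except that the "combine" step places each tile back at its coordinates instead of adding numbers.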

The first step in designing any parallelized system is decomposition. Decomposition is nothing more than taking a problem space and breaking it into discrete parts. When we want to work in parallel, we need to have at least two separate things that we are trying to run. We do this by taking our problem and decomposing it into parts.

Parallelizing a Divide and Conquer algorithm is interesting; however, we will take the parallelization to the next level by distributing the jobs across multiple processes (or machines). Concurrent processing makes the most of the multiple cores and threading capabilities of modern processors, but you are still limited by what you can accomplish on one machine.

How can we distribute the jobs across multiple computers seamlessly? How can we guarantee that we won’t lose any jobs in the case of error or network failure?

The answer: Actor programming model.

Over the past 10 years, I have been implementing and/or architecting applications to improve performance by distributing the work across processes and, consequently, across machines. Over the years, Microsoft has made it easier for developers to leverage hardware resources to parallelize a program’s computation. For example, the TPL (Task Parallel Library), the async-await programming model in C#, the async workflow in F#, and TPL Dataflow all assist developers in these practices.

Despite the benefits those libraries provide in terms of easy-to-use concurrent programming models and simple ways to increase the performance of a program, they all focus on local resources, which means that the speed increase is limited to the local computer. To further increase performance using those libraries, you must add more hardware resources to that computer, for example more CPUs on a server. This is called “scaling up”.

NOTE: Scalability is the measure to which a system can adapt to a change in demand for resources, without negatively impacting performance. Concurrency is a means to achieve scalability: the premise is that, if needed, more CPUs can be added to servers, which the application then automatically starts making use of.

In contrast to “scaling up” there is “scaling out” a system, which refers to dynamically adding more servers to a cluster. This is what we are going to discuss in this post. We are going to implement an application that distributes parallel work across multiple machines. (We will use Docker to run multiple containers that emulate the machines locally.)

For the parallel distribution of the work, Akka.NET fits quite well, in particular Akka.NET Clustering. Indeed, scaling out a system, perhaps to thousands of machines, is not a trivial undertaking: distributed computing is hard… notoriously hard!

The good news is that Akka.NET will give you some really nice tools to make your life in distributed computing a little easier. Once again, Akka doesn’t promise a free lunch, but just as actors simplify concurrent programming, you’ll see that they also simplify the move to truly distributed computing.

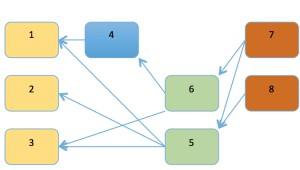

This is the high-level design of the solution we are going to implement. Each square represents a different dockerized process.

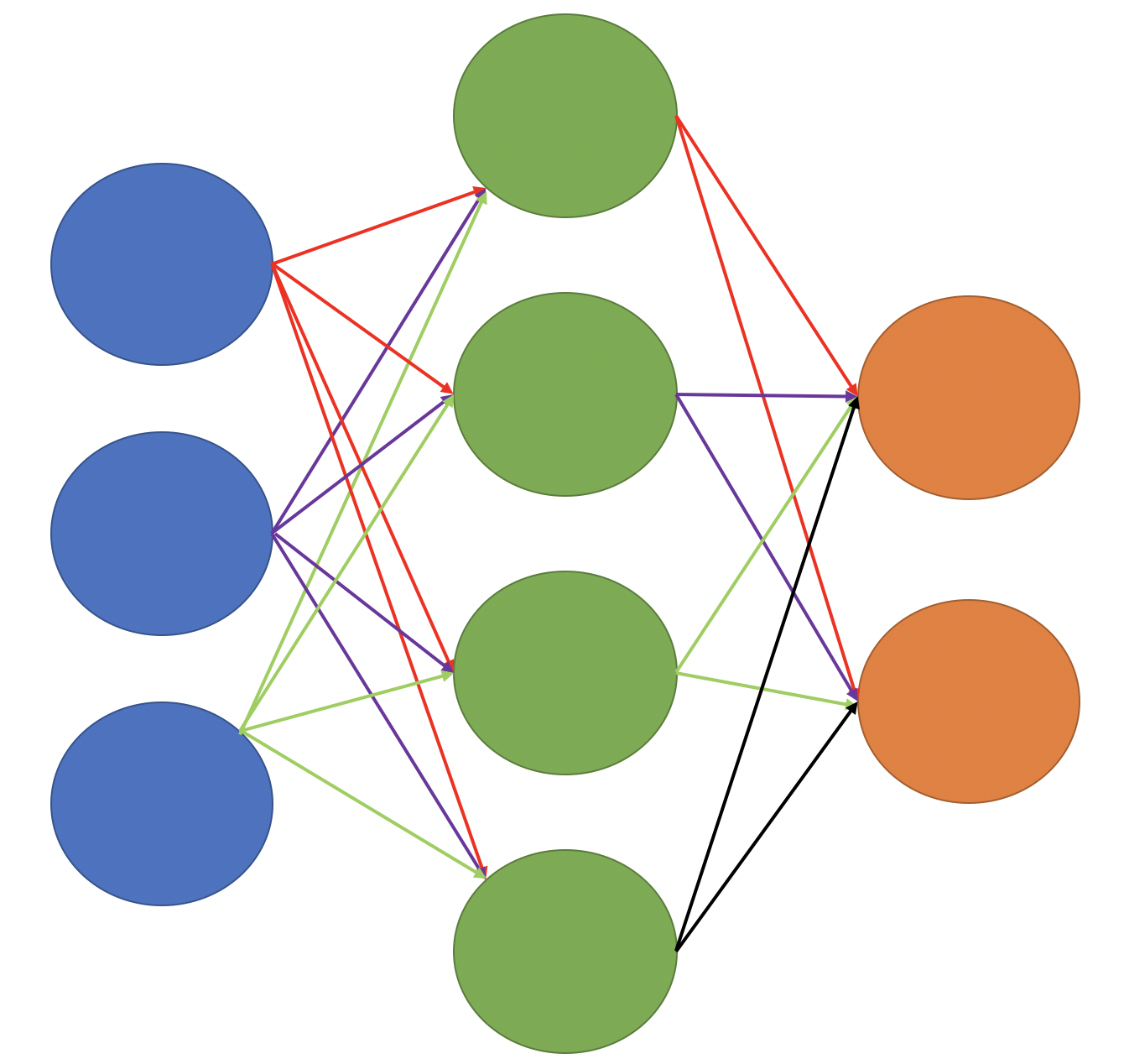

Before jumping into Akka.NET, we have to introduce the main pillar of its foundation: The Actor Programming model.

Why Actor Programming model?

We can write multithreaded programs in almost all languages today. The challenge remains how to share state! Programming multithreaded applications in the traditional way is very difficult, so you might start to use locks, mutexes, semaphores and so on to protect shared mutable state; but how can you tell that the lock is in the right place? In general, neither the compiler nor the debugger will tell you that a lock is misplaced. Furthermore, as the name suggests, locking prevents the program from truly running in parallel. It protects the system from crashing because the state cannot be corrupted, but it has a cost: you pay a performance penalty for taking a lock on every call to guard against a conflict that might occur only once every 10000 times.

In addition, lock-based programs do not compose. You cannot take two atomic operations and create one more atomic operation out of them.

What is the Actor Programming model?

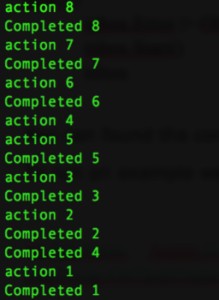

An Actor is a single-thread unit of computation used to design concurrent applications based on message passing in isolation (share-nothing approach). These actors are lightweight constructs that contain a queue and can receive and process messages. In this case, lightweight means that actors have a small memory footprint as compared to spawning new threads, so you can easily spin up 100,000 actors in a computer without a hitch.

An Actor consists of a mailbox that queues the incoming messages, a state, and a behavior that runs in a loop and processes one message at a time. The behavior is the functionality applied to the messages.

The actor model is one of the primary architectural constructs that help you design your model based on asynchronous, event-driven, and nonblocking communication. The sender sends messages based on the fire-and-forget style, and the receiver’s mailbox gets them for further processing. The primary takeaway of this model is that you don’t have to handle concurrency within your code.

You can think of actors as very light programmable message queues on steroids. Those queues are very light in terms of memory footprint, so you can easily create thousands, even millions, of them with minimal impact on memory consumption. This is because actors are reactive: they don’t “do” anything unless they’re sent a message. Actors receive messages one at a time and execute some behavior whenever a message is received. Unlike queues, they can also send messages (to other actors). Messages are simple data structures that can’t be changed after they’ve been created, or in a single word, they’re immutable.
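To make the mailbox, state, and behavior loop concrete, here is a minimal sketch that uses F#’s built-in `MailboxProcessor` rather than Akka.NET. The `CounterMessage` type and the `counter` actor are invented for this illustration.

```fsharp
// Messages are immutable values; the reply channel lets a caller read the state.
type CounterMessage =
    | Add of int
    | GetTotal of AsyncReplyChannel<int>

// A minimal actor: a mailbox, a private state (total), and a behavior loop
// that handles exactly one message at a time, so no lock is needed.
let counter =
    MailboxProcessor<CounterMessage>.Start(fun inbox ->
        let rec loop total = async {
            let! message = inbox.Receive()
            match message with
            | Add n -> return! loop (total + n)
            | GetTotal reply ->
                reply.Reply total
                return! loop total
        }
        loop 0)

// Fire-and-forget sends from several threads; the mailbox serializes them.
[| 1 .. 1000 |] |> Array.Parallel.iter (fun n -> counter.Post(Add n))

let total = counter.PostAndReply(fun channel -> GetTotal channel)
printfn "total = %d" total
```

Even though the messages are posted from several threads at once, the state is only ever touched by the actor’s own loop, which is why the result is deterministic.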

Some actor implementations such as Akka.NET offer features such as fault tolerance, location transparency, and distribution. In this project, the parallelization of the Fractal algorithm is achieved by distributing the work across machines in the network using Akka.NET actors that form a cluster.

What is Akka.NET

Akka.NET is a port of the popular Java/Scala framework Akka to the .NET platform. It is a toolkit for building concurrent, resilient, distributed and scalable software systems. Akka aims to simplify the implementation of asynchronous and concurrent tasks based on the Actor model, with the goal of achieving better performance with a simple programming model.

Why Clustering?

Murphy’s Law states, “Anything that can go wrong, will go wrong.” Computers and distributed systems are definitely included. You will have a fault on your production servers at some point in time, whether that be a software crash, a power cut or a network failure. It’s important that your system can handle issues without causing any downtime to your customers. You get this reassurance for free with Akka.NET clustering. Clustering lets you build your very own concurrent distributed system, which is fault-tolerant and can easily scale up or down to meet requirements.

What is Clustering

A cluster is a dynamic group of nodes. On each node is an actor system that listens on the network. Clusters build on top of the networking abstraction provided by Akka.Remote. Akka Remoting is a communication module for connecting actor systems in a peer-to-peer fashion. An Akka Cluster is incredibly useful for scenarios in which you need high availability guarantees that you can’t achieve by using a single machine to host an actor system.

Here are some examples of high availability scenarios that come up often where using clustering is beneficial:

1. Analytics

2. Marketing Automation

3. Multiplayer Games

4. Devices Tracking / Internet of Things

5. Recommendation Engines

6. And more

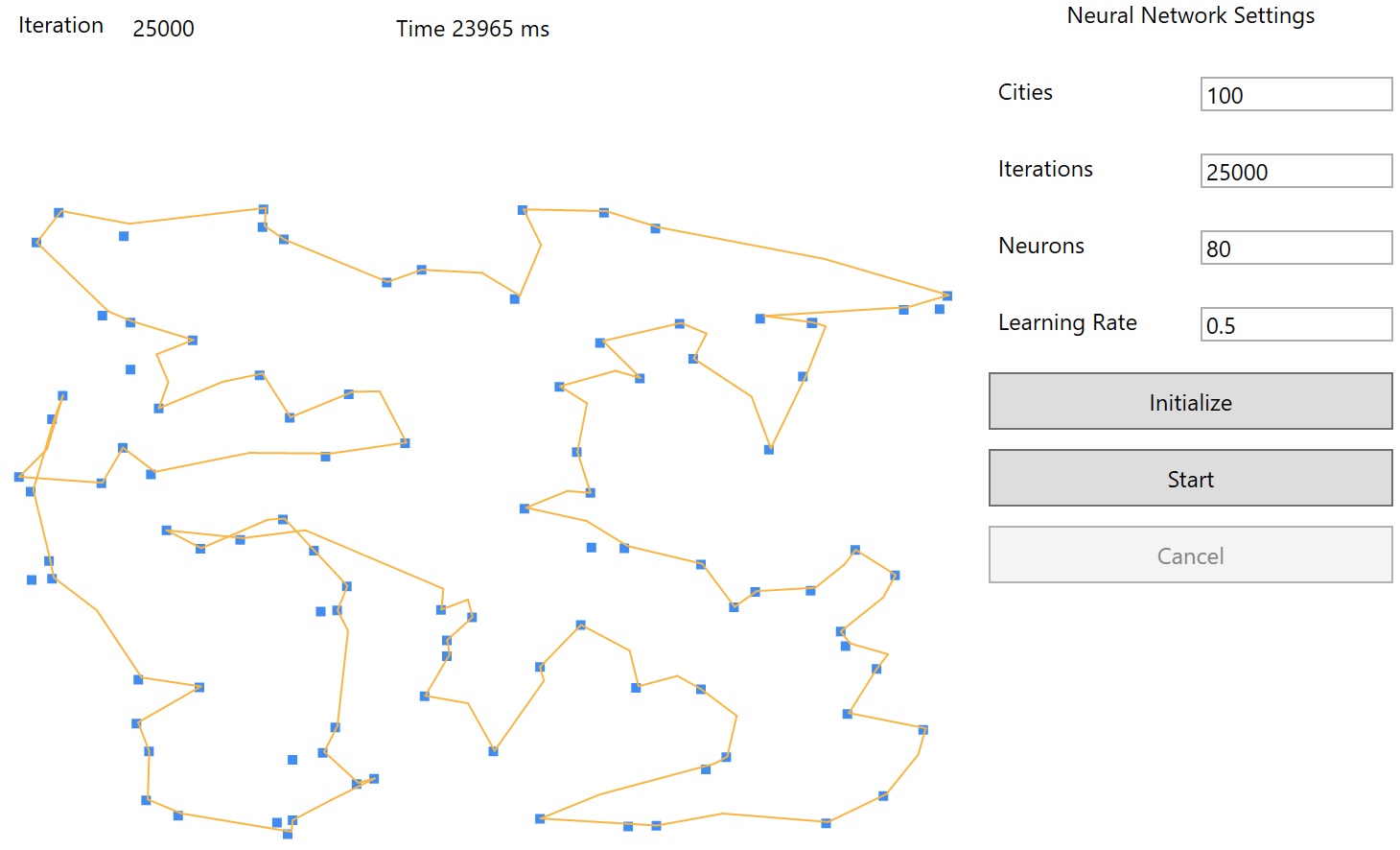

Implementation – Project details

The project is a web-based application that uses WebSockets to concurrently update an HTML canvas with tiles that have been processed to represent a portion of a large Fractal image. When all the tiles are re-positioned on the canvas, the large Fractal image will be fully rendered.

The Web application is an ASP.NET Core application with Giraffe. When we start the Fractal rendering, the Web Server notifies multiple running “Remote Actor Systems” to start the work. These independent processes work in parallel and cohesively together to achieve the same goal as fast as possible.

The solution is composed of three projects:

- Akka.Fractal.Remote is a console project that initializes an ActorSystem, which runs the worker actor “tileRenderActor”. This actor is defined in the project Akka.Fractal.Common, and it is responsible for the image processing of the tiles to compose the Fractal image. This “tileRenderActor” actor is part of the cluster to process the image in a distributed fashion. This project is replicated for as many actor-system instances as we want to run in different processes, and eventually on different machines across the network.

- Akka.Fractal.Server is the web-based component, which is responsible for initiating the image processing and for concurrently rendering the Fractal image.

- Akka.Fractal.Common is a shared project that contains utilities such as image processing, common actor messages, logging and more. This project is referenced from both the other projects to access mutual functionality.

The Akka.Fractal.Server project implementation

Let’s start with the “Startup” section of this project. The first thing that every Akka application does is create an ActorSystem. We use the Dependency Injection (DI) built into ASP.NET Core to create a single instance of the ActorSystem, which can be accessed later through the IoC container. Then, we create a single instance of the “fractalActor”, which we use to start a new image processing job.

    let configureServices (services : IServiceCollection) =
        let sp = services.BuildServiceProvider()
        let env = sp.GetService<IHostingEnvironment>()

        services.Configure<CookiePolicyOptions>(fun (options : CookiePolicyOptions) ->
            options.CheckConsentNeeded <- fun _ -> true
            options.MinimumSameSitePolicy <- SameSiteMode.None
        ) |> ignore

        services.AddMvc().SetCompatibilityVersion(CompatibilityVersion.Version_2_1) |> ignore
        services.AddCors() |> ignore
        services.AddGiraffe() |> ignore

        services.AddSingleton<ActorSystem>(fun _ ->
            let config = ConfigurationLoader.load()
            System.create "fractal" config ) |> ignore

        services.AddSingleton<Actors.SseTileActorProvider>(fun provider ->
            let actorSystem = provider.GetService<ActorSystem>()
            let deploymentOptions =
                [ SpawnOption.Router(FromConfig.Instance) ]
            let tileRenderActor =
                Akka.Fractal.Common.Actors.tileRenderActor actorSystem "remoteactor" (Some deploymentOptions)
            Actors.SystemActors.TileRender <- tileRenderActor
            let fractalActor = Actors.fractalActor tileRenderActor actorSystem "fractalActor"
            Actors.SseTileActorProvider(fun () -> fractalActor)
        ) |> ignore

When the web server starts, as part of the startup, we configure the IoC “Service Collection” to define the initialization of the “ActorSystem” as a singleton:

    services.AddSingleton<ActorSystem>(fun _ ->
        let config = ConfigurationLoader.load()
        System.create "fractal" config ) |> ignore

The “ActorSystem” can create so-called top-level actors, and it’s a common pattern to create only one top-level actor for all actors in the application. When we “create” the ActorSystem, a configuration is loaded from the local “akka.conf” file, which contains a HOCON-style configuration. This file contains details of how to set up and run the cluster, the deployment options for the actors, logging information, and more.

    akka {
        log-config-on-start = on
        stdout-loglevel = DEBUG
        loglevel = DEBUG
        actor {
            provider = "Akka.Cluster.ClusterActorRefProvider, Akka.Cluster"
            debug {
                receive = on
                autoreceive = on
                lifecycle = on
                event-stream = on
                unhandled = on
            }
            deployment {
                /remoteactor {
                    router = round-robin-pool
                    nr-of-instances = 8
                    cluster {
                        enabled = on
                        max-nr-of-instances-per-node = 5
                        allow-local-routees = on
                        use-role = fractal
                    }
                }
            }
        }
        remote {
            dot-netty.tcp {
                port = 0
                hostname = localhost #"0.0.0.0"
            }
        }
        cluster {
            seed-nodes = ["akka.tcp://fractal@lighthouse:4053"]
            roles = [fractal]
        }
    }

HOCON stands for Human-Optimized Config Object Notation, a human-readable configuration format that is a superset of JSON. Using this configuration file, we set the provider “ClusterActorRefProvider” to enable the Akka Clustering feature. Then, in the “deployment” section, we configure the cluster/routing settings that will be applied to every actor whose name matches “remoteactor” (and that loads the configuration from this file). As you can already guess from the name, this configuration targets the remote actor that will be deployed from the web project. Finally, the “cluster” section sets the list of seed-nodes, which are used to establish the mesh connections between all the nodes. A seed node is a system with a well-known, persistent address that all of the systems in your cluster can connect to. For this reason, we are explicitly setting the address here in the “cluster” section.

    seed-nodes = ["akka.tcp://fractal@lighthouse:4053"]

A seed node can be absolutely any ActorSystem that has clustering enabled. Therefore, seed nodes can be any node in your application, but it is recommended that you have a dedicated seed node with no function other than being a seed node. If a seed node goes down or becomes unreachable, your cluster will continue to operate, but new nodes will not be able to join until a seed node is reachable again.

With that in mind, there’s an open source dedicated seed node project called Lighthouse, which is a simple, but effective dedicated seed node. You can deploy as many as you like alongside your app and have your actor systems point at those. Once it’s there, it will never need to be modified unless you want to reconfigure it or there is a new version that you need. Lighthouse is basically a stub of an Akka.NET ActorSystem with cluster capabilities. Later, when we run the application, we will simply create a Docker image to run the Lighthouse seed node.

The “Tile Render Actor”

In the web server “Startup”, when we register the singleton factory for the “fractalActor”, we first create an instance of the “tileRenderActor”, which is defined in the “Akka.Fractal.Common” project. This actor is then passed to the “fractalActor” function, giving it the actor reference to which it sends the messages for the Fractal image processing.

    let deploymentOptions =
        [ SpawnOption.Router(FromConfig.Instance) ]
    let tileRenderActor =
        Akka.Fractal.Common.Actors.tileRenderActor actorSystem "remoteactor" (Some deploymentOptions)
    let fractalActor = Actors.fractalActor tileRenderActor actorSystem "fractalActor"
    Actors.SseTileActorProvider(fun () -> fractalActor)

To create an Actor instance we need the current ActorSystem reference, an arbitrary name for the Actor (in this example “remoteactor”), and the deployment options. As previously mentioned, we are loading the configuration for this actor from the local config file “akka.conf”, where the settings in the “deployment” section match the name of the Actor.

Next, the “fractalActor” is registered as a singleton instance that we can retrieve by calling the associated delegate “Actors.SseTileActorProvider”.

    services.AddSingleton<Actors.SseTileActorProvider>(fun provider ->
        let actorSystem = provider.GetService<ActorSystem>()

In this way, we use DI at the “Controller” constructor level to get the reference of this delegate, which we can then invoke on demand to access the instance of the actor “fractalActor”.

    let sseTileActor = Actors.fractalActor tileRenderActor actorSystem "fractalActor"

Here is the Giraffe web-part “startFractal”, where we get and invoke the delegate “SseTileActorProvider”.

    let startFractal =
        fun (next : HttpFunc) (ctx : HttpContext) ->
            task {
                let actorProvider = ctx.GetService<Actors.SseTileActorProvider>()
                let actor = actorProvider.Invoke()
                let command = Akka.Fractal.Common.Messages.FractalSize(4000,4000)
                actor.Tell command
                printfn "Sent Command RenderImage"
                return! json "OK" next ctx
            }

After the reference of the “fractalActor” instance is retrieved, the process starts by sending the message with the fractal size. In this case, for simplicity, the value is hard-coded to 4000.

Here we create the “command” message and send it, or Tell, to the “fractalActor”.

    let command = Akka.Fractal.Common.Messages.FractalSize(4000,4000)
    actor.Tell command

Now the fractal image processing starts. Below is the full implementation of the “fractalActor”:

    type SseTileActorProvider = delegate of unit -> IActorRef

    let fractalActor (tileRenderActor : IActorRef)
                     (system : ActorSystem) name =
        spawn system name (fun (mailbox : Actor<Messages.FractalSize>) ->
            let rec loop () = actor {
                let! request = mailbox.Receive()
                let split = 20
                let ys = request.Height / split
                let xs = request.Width / split

                let renderActor =
                    if mailbox.Context.Child("renderActor").IsNobody() then
                        Logging.logInfo mailbox "Creating child actor RenderActor"
                        renderActor request.Width request.Height split mailbox.Context "renderActor"
                    else mailbox.Context.Child("renderActor")

                for y = 0 to split - 1 do
                    let mutable yy = ys * y
                    Logging.logInfof mailbox "Sending message %d - sseTileActor" y
                    for x = 0 to split - 1 do
                        let mutable xx = xs * x
                        tileRenderActor.Tell(Messages.RenderTile(yy, xx, xs, ys, request.Height, request.Width), renderActor)

                return! loop ()
            }
            loop ())

To use Akka.NET in an idiomatic, functional F# way, we are using the Akka.NET F# module (NuGet package Akka.FSharp), which provides several useful functions, such as the “spawn” function used in the previous code to create an instance of the actor “fractalActor”. Below is the “spawn” function definition:

- spawn (actorFactory : IActorRefFactory) (name : string) (f : Actor<'Message> -> Cont<'Message, 'Returned>) : IActorRef – spawns an actor using a specified actor computation expression.

This function takes a lambda with the argument type `Actor<Messages.FractalSize>`, which strongly types the messages that the actor receives. The message type FractalSize is defined in the “Akka.Fractal.Common” project. When the message is received, we use the size of the Fractal to generate the set of sub-squares and the corresponding messages. These “RenderTile” messages contain the size and coordinates of each tile; the coordinates will be used to reposition the block in the correct place to compose the Fractal image.

Next, we iterate through the tiles in a for loop to send these messages to the “tileRenderActor” remote actor, which will create the partial representation of the Fractal based on the coordinates of the tile.

When a tile is completed, it is sent back to the web server to update the UI. To simplify the UI rendering, we define a different actor, “renderActor”, whose purpose is to convert the messages received into a JSON representation. Then the JSON message is sent to update the Fractal image using the underlying web socket connection.

    let renderActor (width : int) (height : int)
                    (split : int) system name =
        spawnOpt system name (fun (inbox : Actor<Messages.RenderedTile>) ->
            let ys = height / split
            let xs = width / split
            let rec loop (image : Image<Rgba32>) totalMessages = actor {
                let! renderedTile = inbox.Receive()
                Logging.logInfof inbox "RenderedTile X : %d - Y : %d - Size : %d" renderedTile.X renderedTile.Y (renderedTile.Bytes.Length)
                let sseTile = Messages.SseFormatTile(renderedTile.X, renderedTile.Y, Convert.ToBase64String(renderedTile.Bytes))
                let text = JsonConvert.SerializeObject(sseTile)
                Middleware.sendMessageToSockets text
                return! loop image (totalMessages - 1)
            }
            loop (new Image<Rgba32>(width, height)) (ys + xs))
            [ SpawnOption.SupervisorStrategy (Strategy.OneForOne (fun error ->
                match error with
                | _ -> Directive.Resume )) ]

The interesting part of the “fractalActor” actor is where an instance of the “renderActor” actor is either created as a child actor or, if it already exists, retrieved. In this way we ensure that only one actor of this type is running.

    let renderActor =
        if mailbox.Context.Child("renderActor").IsNobody() then
            Logging.logInfo mailbox "Creating child actor RenderActor"
            renderActor request.Width request.Height split mailbox.Context "renderActor"
        else mailbox.Context.Child("renderActor")

Next, we need to tell the remote actor “tileRenderActor” to send its response back to the “renderActor” when a tile image is ready. To achieve this pattern, which creates continuous message passing between actors, we simply pass the reference of the “renderActor” as the sender argument of the “Tell” call.

    tileRenderActor.Tell(Completed Messages.Completed.instance, renderActor)

In this way, the receiver actor “tileRenderActor” sees the “renderActor” as the “Sender”, and uses that reference as the target of its responses. If we don’t use this override, the “Sender” is by default the “fractalActor”. This is a very useful pattern that makes it easy to construct sophisticated communication topologies.
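The idea of redirecting replies can be sketched without Akka.NET, using F#’s built-in `MailboxProcessor` as a stand-in. This is only an analogy: here the reply target travels inside the message, whereas Akka.NET carries it as the sender of the “Tell” call. All the type and value names below (`CollectorMessage`, `WorkerMessage`, `collector`, `worker`) are hypothetical.

```fsharp
type CollectorMessage =
    | TileDone of int
    | GetCount of AsyncReplyChannel<int>

// A job names the actor that should receive the result (the "reply-to").
type WorkerMessage =
    | Render of int * MailboxProcessor<CollectorMessage>
    | Flush of AsyncReplyChannel<unit>

// Collector: counts finished tiles (plays the role of the "renderActor").
let collector =
    MailboxProcessor<CollectorMessage>.Start(fun inbox ->
        let rec loop count = async {
            let! message = inbox.Receive()
            match message with
            | TileDone _ -> return! loop (count + 1)
            | GetCount reply ->
                reply.Reply count
                return! loop count
        }
        loop 0)

// Worker: replies to whichever actor the job names, not to the caller.
let worker =
    MailboxProcessor<WorkerMessage>.Start(fun inbox ->
        let rec loop () = async {
            let! message = inbox.Receive()
            match message with
            | Render (tileId, replyTo) -> replyTo.Post(TileDone tileId)
            | Flush reply -> reply.Reply ()
            return! loop ()
        }
        loop ())

// The caller dispatches the jobs but never receives the results itself.
for tileId in 1 .. 5 do
    worker.Post(Render (tileId, collector))

// Wait until the worker has drained its mailbox, then ask the collector.
worker.PostAndReply(fun channel -> Flush channel)
let finished = collector.PostAndReply(fun channel -> GetCount channel)
printfn "tiles collected: %d" finished
```

The caller stays free to keep dispatching work, while a dedicated actor gathers the results.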

NOTE: here is more info about the “Tell, don’t Ask” pattern.

The Akka.Fractal.Remote project implementation

The third project, the “Akka.Fractal.Remote”, is the heavy worker of this solution.

This project is a console application that runs an ActorSystem to spawn the actor “tileRenderActor” referenced from the Common project. Although it is a “simple” console project, a few interesting things are happening.

    [<EntryPoint>]
    let main argv =
        Console.Title <- sprintf "Akka Fractal Remote System - PID %d" (System.Diagnostics.Process.GetCurrentProcess().Id)
        let config = ConfigurationLoader.load().BootstrapFromDocker()
        use system = System.create "fractal" config
        Console.ForegroundColor <- ConsoleColor.Green
        printfn "Remote Worker %s listening..." system.Name
        printfn "Press [ENTER] to exit."
        system.WhenTerminated.Wait()
        0

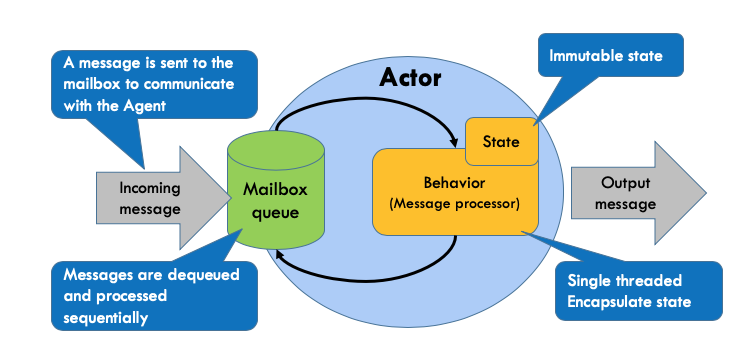

The actor “tileRenderActor” is deployed remotely to this project from the “Akka.Fractal.Server” project. The remote deployment is initiated when we instantiate the “fractalActor”. The deployment uses the configuration from the “akka.conf” file, which sets the actor routing with a “Round-Robin” strategy to balance the work across 8 routees.

    /remoteactor {
        router = round-robin-pool
        nr-of-instances = 8
        cluster {
            enabled = on
            max-nr-of-instances-per-node = 5
            allow-local-routees = off
            use-role = fractal
        }
    }

Each of these routees functions as a “copy” of the “tileRenderActor” actor, but this is all transparent to the caller/sender of the message. In fact, we send messages to this actor as we would to any other actor, using the “Tell” command. It is the router, through its routing strategy, that distributes the work in parallel among the underlying actors (routees).

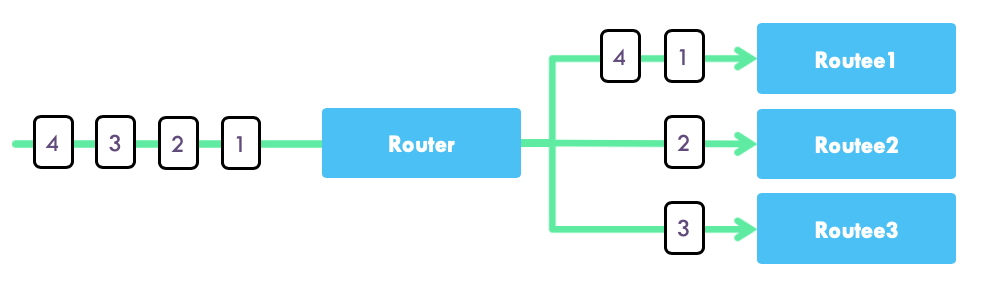

Round-Robin is a strategy where messages are distributed to each actor in sequence, which is good for even distribution. In Akka.NET, routers are used to spawn multiple instances of one actor so that work can be distributed and load-balanced between them. This router creates its own actors; you provide the number of instances to the router and it handles the creation by itself.
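The round-robin rule itself is simple enough to sketch in a few lines of plain F#. The function `roundRobin` below is a hypothetical illustration of the assignment order only; it is not how the Akka.NET router is implemented.

```fsharp
// Hypothetical illustration: assign each message to a routee in sequence,
// wrapping around to the first routee after the last one.
let roundRobin (routeeCount : int) (messages : 'a list) =
    messages
    |> List.mapi (fun index message -> (index % routeeCount, message))
    |> List.groupBy fst
    |> List.map (fun (routee, assigned) -> (routee, List.map snd assigned))

// Twenty tile numbers spread over eight routees.
let assignment = roundRobin 8 [ 1 .. 20 ]

for (routee, tiles) in assignment do
    printfn "routee %d -> %A" routee tiles
```

With 20 messages and 8 routees, the first four routees receive three messages each and the remaining four receive two, so no routee is ever more than one message ahead of another.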

In the “Akka.Fractal.Remote” console project, when the ActorSystem is initialized, its configuration is loaded from the local “akka.conf” file in combination with the “BootstrapFromDocker” extension, which will be useful later when running the solution with “Docker-Compose”. In fact, this extension allows you to effortlessly inject some arbitrary environment-variables to the Docker images. The only thing that has to change is how the reference to remote actors is looked up, which can be achieved solely through configuration. The code stays exactly the same, which means that we can transition from scaling up to scaling out without having to change a single line of code. For example, we will use these variables to execute multiple instances of the same docker image with different ports exposed.

Here is the implementation of the “tileRenderActor” actor:

    let tileRenderActor (system : ActorSystem) name (opts : SpawnOption list option) =
        let options = defaultArg opts []
        Spawn.spawnOpt system name (fun (inbox : Actor<Messages.RenderTile>) ->
            let rec loop count = actor {
                let! renderTile = inbox.Receive()
                let sender = inbox.Sender()
                let self = inbox.Self
                Logging.logInfof inbox "TileRenderActor %A rendering %d, %d" self.Path renderTile.X renderTile.Y
                let res = mandelbrotSet renderTile.X renderTile.Y renderTile.Width renderTile.Height renderTile.ImageWidth renderTile.ImageHeight 0.5 -2.5 1.5 -1.5
                let bytes = toByteArray res
                let tileImage = Messages.RenderedTile(renderTile.X, renderTile.Y, bytes)
                sender <! tileImage
                return! loop (count + 1)
            }
            loop 0)
            options

When the Actor receives a message of type “RenderTile”, it processes the image using the function “mandelbrotSet”, passing along the size and coordinates of the tile. Then the resulting image is converted into a byte array, which is sent back to the sender together with the tile’s original coordinates.

    let tileImage = Messages.RenderedTile(renderTile.X, renderTile.Y, bytes)
    inbox.Sender() <! tileImage

As previously mentioned, the sender reference travels with the received message. Because the “fractalActor” passed the “renderActor” as the sender, the response goes to the “renderActor” instead.
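The body of “mandelbrotSet” is not shown in this post. As a rough idea of the per-pixel work each tile involves, here is a minimal escape-time sketch. The functions `escapeTime` and `pixelToPoint` are hypothetical, and the plane bounds (-2.5 to 0.5 and -1.5 to 1.5) are only an assumed reading of the four numeric arguments passed to “mandelbrotSet”.

```fsharp
// Hypothetical escape-time test for one point c = cx + i*cy. It iterates
// z <- z^2 + c from z = 0 and returns the number of iterations performed
// before |z| exceeds 2, or maxIterations if the point never escapes.
let escapeTime (maxIterations : int) (cx : float) (cy : float) =
    let rec iterate (zx : float) (zy : float) (n : int) =
        if n >= maxIterations || zx * zx + zy * zy > 4.0 then n
        else iterate (zx * zx - zy * zy + cx) (2.0 * zx * zy + cy) (n + 1)
    iterate 0.0 0.0 0

// Map a pixel of the full image to a point of the complex plane, so a tile
// can be rendered knowing only its own offset and the full image size.
// The bounds are an assumption, not taken from the project.
let pixelToPoint (imageWidth : int) (imageHeight : int) (px : int) (py : int) =
    let xMin, xMax, yMin, yMax = -2.5, 0.5, -1.5, 1.5
    (xMin + (xMax - xMin) * float px / float imageWidth,
     yMin + (yMax - yMin) * float py / float imageHeight)

// The center pixel of a 4000 x 4000 image.
let (cx, cy) = pixelToPoint 4000 4000 2000 2000
printfn "point (%f, %f) -> %d iterations" cx cy (escapeTime 100 cx cy)
```

Because every pixel depends only on its own coordinates, tiles can be rendered in any order and on any node, which is what makes the algorithm a good fit for distribution.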

That’s it. When this project starts, there will be eight “tileRenderActor” actors running in parallel to cope with the high volume of requests and to process all the messages received by load balancing the work.

What about running copies of same project in different processes (or machines)?

This is where Akka Cluster comes into play, coordinating all the jobs across the running nodes to scale out the work. We are using Docker to create an image for the “Akka.Fractal.Server”, multiple instances of the worker project “Akka.Fractal.Remote”, and a seed node image of the open-source project “Lighthouse”.

The purpose of Docker is to provide an isolated environment for the applications running inside the container image. Thus, Docker is a perfect fit for our project. For more info see this link.

To execute all the Docker images, we are using a docker-compose.yml file, which is a YAML file that defines how Docker containers should behave when running. Docker Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services. Then, with a single command, you create and start all the services from your configuration.

Using Compose is basically a three-step process:

- Define your app’s environment with a Dockerfile so it can be reproduced anywhere

- Define the services that make up your app in docker-compose.yml so they can be run together in an isolated environment

- Run docker-compose up and Compose starts and runs your entire app

Both the “Server” and “Remote” projects have a dedicated Dockerfile that defines what goes on in the environment inside each container. In general, a Dockerfile sets how the image should access and expose resources. Here are more details about Dockerfile.

Here is the Dockerfile for the web-server image.

    FROM mcr.microsoft.com/dotnet/core/sdk:2.2 AS build-env
    WORKDIR /app

    COPY ./Akka.Fractal.Server/Akka.Fractal.Server.fsproj ./Akka.Fractal.Server/Akka.Fractal.Server.fsproj
    COPY ./Akka.Fractal.Common/Akka.Fractal.Common.fsproj ./Akka.Fractal.Common/Akka.Fractal.Common.fsproj
    COPY ./build.sh ./build.sh
    COPY ./paket.lock ./paket.lock
    COPY ./paket.dependencies ./paket.dependencies
    RUN ./build.sh
    COPY ./.paket/Paket.Restore.targets ./.paket/Paket.Restore.targets
    RUN dotnet restore ./Akka.Fractal.Common/Akka.Fractal.Common.fsproj
    RUN dotnet restore ./Akka.Fractal.Server/Akka.Fractal.Server.fsproj

    # Copy all source code to image.
    COPY ./Akka.Fractal.Server ./Akka.Fractal.Server
    COPY ./Akka.Fractal.Common ./Akka.Fractal.Common
    WORKDIR ./Akka.Fractal.Server/
    RUN dotnet build Akka.Fractal.Server.fsproj -c Release
    RUN dotnet publish Akka.Fractal.Server.fsproj -c Release -o publish

    # Build runtime image
    FROM mcr.microsoft.com/dotnet/core/aspnet:2.2
    WORKDIR /app
    COPY --from=build-env /app/Akka.Fractal.Server/publish/ .
    EXPOSE 80
    EXPOSE 5000
    ENTRYPOINT ["dotnet", "Akka.Fractal.Server.dll"]

Each of those Dockerfiles generates a Docker image with a full deployment of the target project, and exposes the port to access it. For example, the “Dockerfile.web”, which targets the “Akka.Fractal.Server” project, exposes port 5000. Consequently, when running the web server image, we can access the web application locally using the address “localhost:5000” and start the Fractal image rendering.

The Docker Compose YAML file

Here is the Docker Compose YAML file:

    services:
      lighthouse:
        image: petabridge/lighthouse:latest
        hostname: lighthouse
        ports:
          - '4053:4053'
          - '9110:9110'
        environment:
          ACTORSYSTEM: "fractal"
          CLUSTER_IP: "lighthouse"
          CLUSTER_PORT: 4053
          CLUSTER_SEEDS: "akka.tcp://fractal@lighthouse:4053"
      #
      akkafractal.web:
        build:
          context: .
          dockerfile: Dockerfile.web
        ports:
          - '0:80'
          - '5000:5000'
        depends_on:
          - akkafractal-worker-1
          # - akkafractal-worker-2
          - lighthouse
        environment:
          ASPNETCORE_ENVIRONMENT: local
          ASPNETCORE_URLS: http://+:5000
          CLUSTER_IP: "akkafractal.web"
          CLUSTER_PORT: 0
      akkafractal-worker-1:
        build:
          context: .
          dockerfile: Dockerfile.remoting
        ports:
          - "0:9110"
        depends_on:
          - lighthouse
        environment:
          CLUSTER_IP: "akkafractal-worker-1"
          CLUSTER_PORT: 0

The first service “lighthouse” creates and runs the image of the seed node Lighthouse, exposing port 4053. This is very important, since the other nodes have to send their request to join the cluster to this “well-known” address.

The second service “akkafractal.web” runs the Dockerfile.web to create the image of the “Akka.Fractal.Server” exposing port 5000.

The next services are copies of the same image built from Dockerfile.remoting. The file above defines one worker, “akkafractal-worker-1” (a second one is commented out in “depends_on”); you can add as many as you like, just remember to give each service a different name! Because “CLUSTER_PORT” is set to 0, each node binds to a dynamically assigned free port, so no manual port bookkeeping is needed. When a node requests to join the cluster, it announces its address to the seed node “lighthouse”.

Note: the “environment” sections define variables that are set independently for each container.

That’s it… now you can run “docker-compose up”, navigate to the “localhost:5000” and start the application.

May your Mandelbrot be Merry and bring you cheer this Holiday Season!